Thirteen gold ides

This is a live electronics and live computer graphics piece from 1991.

Parallel

Parallel processes had been a challenge since my earliest steps in programming. The computers of the 1980s had a single processor and therefore worked serially. If you needed speed or any real performance, you had to work in pure machine language. There were developments such as the 'Transputer' parallel-processing plug-in card, but they were far beyond my reach. So in this project I chose to use living, thinking systems instead of programming the processes myself. Each animal would take care of its own part of the parallel process. Moreover, by bringing the animals together in one space, interactions would take place whose results I would only have to collect. I would follow the animals with a video camera and use a machine language program to scan their movements and convert them into MIDI information for the connected music equipment.

Flies and fish

For these biological systems I first thought of a swarm of flies. Despite all my efforts to collect these flies, this was not a success. They were much too fast for the electronics to follow, and the glass container I had made was much too small for the insects to interact in. I released them and came up with the idea of using small fish: gold ides. This was the right choice.

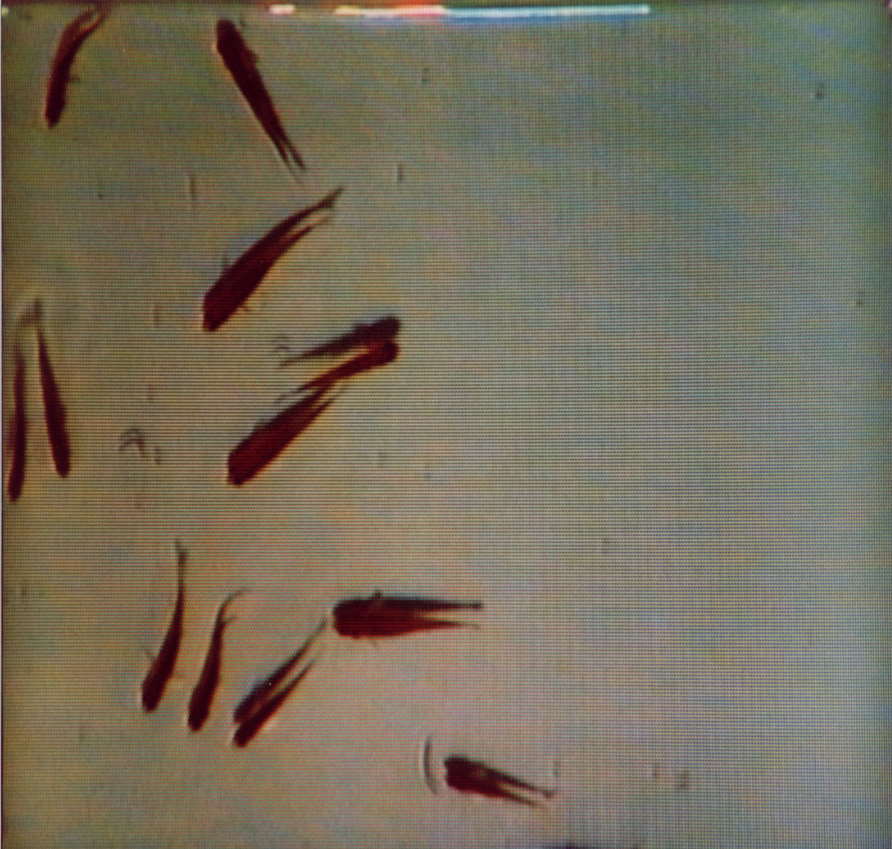

In preparation for the performance, a video camera was set up directly above an aquarium in which the gold ides were swimming, pointing straight down. Although it would technically have been no problem to let the fish "perform" live, the image was recorded on a video recorder beforehand. The fish would otherwise not have survived the stress and lack of oxygen.

From bio to electro

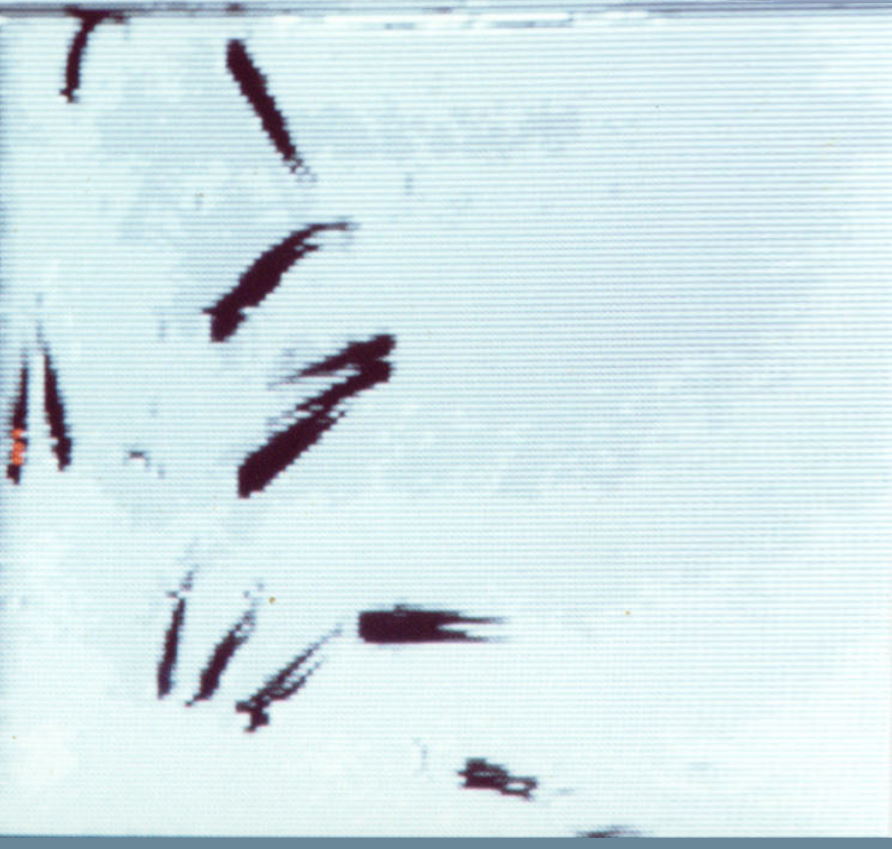

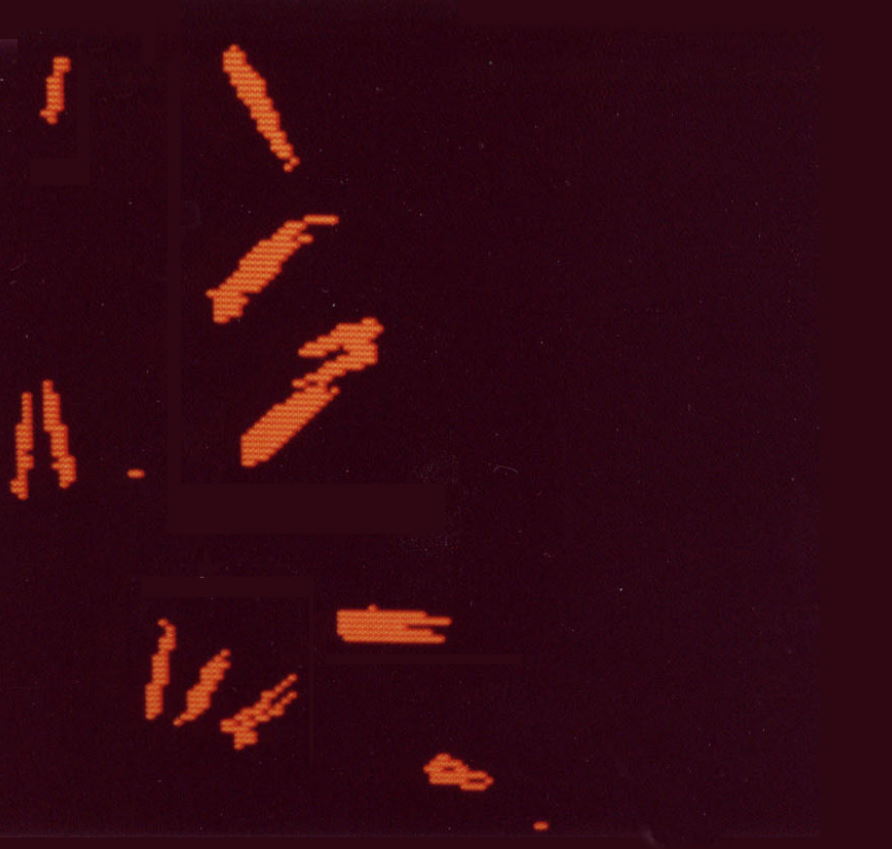

The image signal was sent from the VCR to a 'digitizer', which passed the digitized data in real time to the ARM (Acorn RISC Machine). (Incidentally: now, 20 years later, an updated version of this chip is still widely used in portable devices such as smartphones.) The image was reduced to a quarter of its area, which made image refresh and processing four times faster. The colour palette was also reduced to two colours: red and black. This evened out the colour differences caused by the water and shadows.
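The two reductions described above can be illustrated with a short sketch. This is not the original machine language routine; the function name `quarter_and_threshold`, the brightness threshold of 128, and the choice to keep every second pixel in each direction are all assumptions made for illustration.

```python
def quarter_and_threshold(frame, threshold=128):
    """Reduce a frame to 1/4 of its area and to two colours.

    `frame` is a list of rows, each row a list of (r, g, b) tuples.
    Keeping every second row and every second column leaves a quarter
    of the pixels. Each kept pixel becomes 1 ("red") if its average
    brightness reaches `threshold` (an assumed value), else 0 ("black").
    """
    reduced = []
    for row in frame[::2]:
        reduced.append([1 if sum(px) / 3 >= threshold else 0 for px in row[::2]])
    return reduced


# A tiny 4x4 test frame with two bright "fish" pixels on a dark background.
dark = (0, 0, 0)
frame = [
    [(200, 180, 40), (10, 10, 10), dark, dark],
    [dark, dark, dark, dark],
    [dark, dark, (220, 160, 30), dark],
    [dark, dark, dark, dark],
]
mask = quarter_and_threshold(frame)
```

With only two possible values per pixel, small brightness variations from water and shadow fall on one side of the threshold or the other and disappear from the data.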

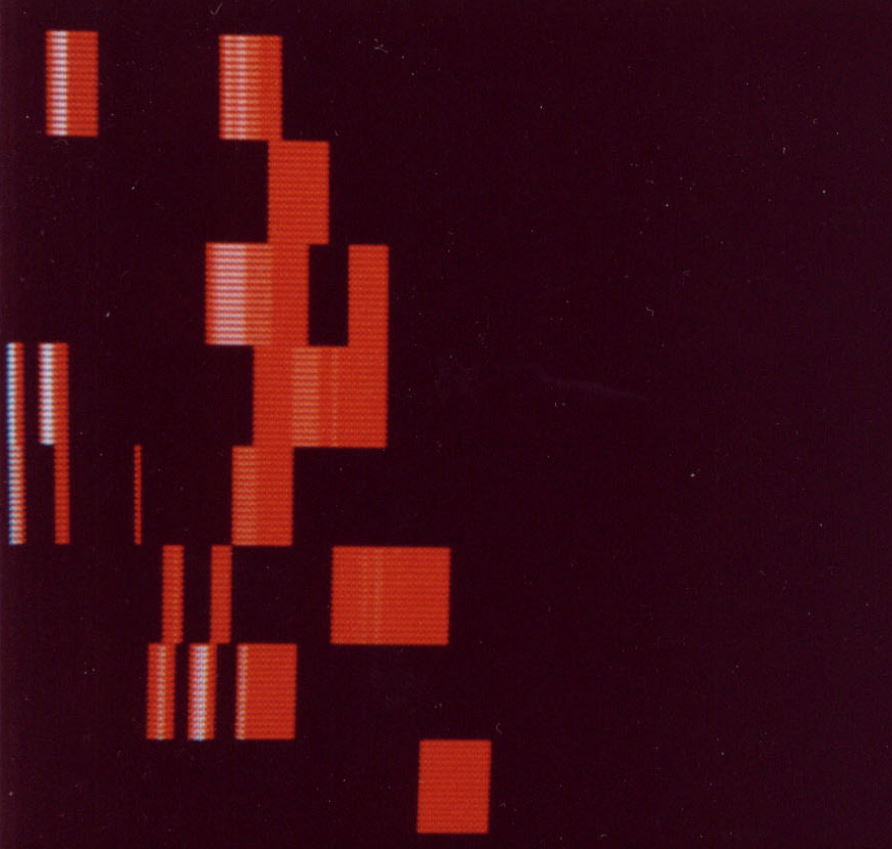

Ten times a second, a machine language program converted the image into blocks that represented the 'weight' of fish in a certain place. This produced a third image, in which the position and colour of each block (the whiter, the more fish) were converted into MIDI data. A sampler with eight separate channels positioned the sound in the quadraphonic sound picture and also determined, from the information received, which fragments of the eight sound samples should be played as a loop. The vertical position of the fish determined which sound sample was used. As time went on, the loops became shorter and thus more abstract and higher in tone.
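As a rough sketch of the idea, the block 'weights' and a MIDI message could be derived as follows. The actual mapping used in the piece is not documented here, so everything specific is an assumption: the names `block_weights` and `heaviest_block_to_note_on`, the use of a plain MIDI note-on message, the base note of 60, and the rule that only the heaviest block is reported. Only the link between vertical position and one of eight samples follows the description above.

```python
def block_weights(mask, block):
    """Count the set pixels in each block x block cell of a 0/1 mask."""
    rows = len(mask) // block
    cols = len(mask[0]) // block
    weights = [[0] * cols for _ in range(rows)]
    for y in range(rows * block):
        for x in range(cols * block):
            weights[y // block][x // block] += mask[y][x]
    return weights


def heaviest_block_to_note_on(weights, block, samples=8, base_note=60):
    """Turn the heaviest block into a 3-byte MIDI note-on message.

    Assumed mapping (hypothetical): the block's row selects one of
    `samples` channels, its column offsets the note number, and its
    weight scales the velocity. Returns None when no fish are visible.
    """
    rows = len(weights)
    weight, by, bx = max(
        (w, y, x) for y, row in enumerate(weights) for x, w in enumerate(row)
    )
    if weight == 0:
        return None
    channel = by * samples // rows            # vertical position -> sample 0..7
    note = min(127, base_note + bx)           # horizontal position -> note
    velocity = max(1, weight * 127 // (block * block))
    return bytes([0x90 | channel, note, velocity])


mask = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 0],
]
weights = block_weights(mask, block=2)
message = heaviest_block_to_note_on(weights, block=2)
```

Calling these two functions on a fresh mask ten times a second would give a stream of messages that follows the densest group of fish around the tank.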

The Performance. The Video.

The video shown here is the one that was sent to the computer, processed by the machine language program and converted into MIDI system-exclusive codes for the sampler. The audience saw this video. The images on the computer screen, however, were as shown in the photographs. Afterwards, I made a copy of this video and added the music.

last update: 2021.06.06